One of the main defenses offered by those enthusiastic about AI art generators is that although the models are trained on existing images, everything they create is new. AI evangelists often compare these systems to real artists: human creators take inspiration from all those who came before them, so why shouldn’t AI be allowed to draw on previous work in the same way?

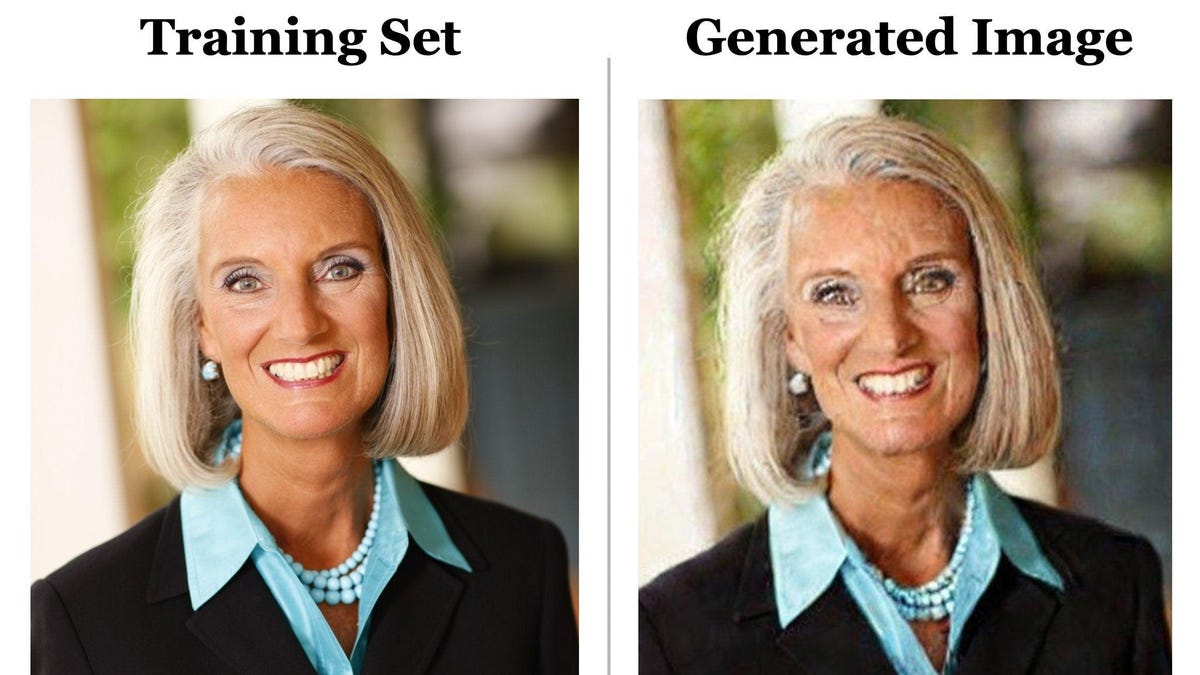

New research could undercut that argument, and it might even become a major sticking point in several ongoing lawsuits over AI-generated content and copyright. Researchers in both industry and academia have found that the most popular and up-and-coming AI image generators can “memorize” images from the data they were trained on. Instead of creating something entirely new, certain prompts will make the AI simply reproduce an image, and some of these recreated images may be copyrighted. Even worse, modern generative AI models can memorize and reproduce sensitive information scraped for use in an AI training set.

The study was conducted by researchers in the tech industry, specifically at Google and DeepMind, and at universities such as Berkeley and Princeton. The same crew worked on an earlier study that identified a similar problem with AI language models, specifically GPT-2, the predecessor to OpenAI’s extraordinarily popular ChatGPT. Getting the band back together, the researchers, led by Google Brain researcher Nicholas Carlini, found that both Google’s Imagen and the popular open-source Stable Diffusion were able to reproduce images, some of which had obvious copyright and licensing implications.

In the team’s first example, the image was generated from a caption listed in Stable Diffusion’s training data, the multi-terabyte scraped image database known as LAION. The team fed that caption into Stable Diffusion as a prompt, and out came the same image, only slightly distorted by digital noise. The process of finding these duplicates was relatively simple: the team ran the same prompt multiple times, and when the model kept returning the same image, the researchers manually checked whether that image was present in the training set.
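The repeat-the-prompt check described above can be sketched in a few lines. This is a minimal illustration under our own assumptions, not the researchers’ code: each generated image is represented as a flat list of pixel values between 0 and 1, and the helper names `rms_distance` and `flag_possible_memorization`, along with the default threshold and group size, are hypothetical. The logic is that a model which generalizes should give varied results when one prompt is run repeatedly, so a cluster of near-identical outputs is a signal worth checking by hand against the training set.

```python
import math
from typing import Sequence

# Hypothetical representation: an "image" is a flat sequence of pixel
# intensities in [0, 1]; a real pipeline would decode image files first.
Image = Sequence[float]


def rms_distance(a: Image, b: Image) -> float:
    """Root-mean-square pixel difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return math.dist(a, b) / math.sqrt(len(a))


def flag_possible_memorization(
    generations: Sequence[Image],
    threshold: float = 0.05,
    min_group: int = 3,
) -> bool:
    """Return True if at least `min_group` outputs for one prompt are
    near-duplicates of a single output (a hint of memorization, not proof)."""
    best = 0
    for anchor in generations:
        # Count generations (including the anchor itself) within `threshold`.
        close = sum(1 for other in generations if rms_distance(anchor, other) < threshold)
        best = max(best, close)
    return best >= min_group


if __name__ == "__main__":
    # Five identical outputs plus one outlier: flagged for manual review.
    repeated = [[0.5] * 4 for _ in range(5)] + [[0.0, 1.0, 0.0, 1.0]]
    print(flag_possible_memorization(repeated, threshold=0.1, min_group=5))  # True
    # Six clearly different outputs: not flagged.
    varied = [[i / 10] * 4 for i in range(6)]
    print(flag_possible_memorization(varied, threshold=0.05, min_group=3))  # False
```

A flag from a check like this only says the outputs agree with each other; confirming memorization still means finding the matching image in the training data, which is the manual step the team describes.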

Two of the researchers on the paper, Eric Wallace, a PhD student at UC Berkeley, and Vikash Sehwag, a PhD student at Princeton University, told Gizmodo in a Zoom interview that image duplication is rare. Their team tried about 300,000 different captions and found a memorization rate of just 0.3%. Copied images were much rarer for models such as Stable Diffusion that have worked to deduplicate the images in their training sets, though in the end all diffusion models will have the same problem to a greater or lesser degree. The researchers found that Imagen was fully capable of memorizing images that appeared only once in the dataset.

“The caveat here is that the model is supposed to generalize, and it is supposed to generate new images rather than spitting out a copy held in memory,” Sehwag said.

Their research shows that as AI systems get larger and more sophisticated, the likelihood that they will reproduce copied material increases. A smaller model like Stable Diffusion simply doesn’t have the capacity to store much of its training data. That could change a lot in the next few years.

“Maybe next year, whatever new model comes out that’s a lot bigger and a lot more powerful, those kinds of memorization risks are probably going to be much higher than they are now,” Wallace said.

Through a complicated process that involves corrupting training data with noise and then learning to remove that same distortion, diffusion-based machine learning models generate data, in this case images, similar to what they were trained on. Diffusion models were an evolution from generative adversarial networks, or GAN-based machine learning.
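For readers curious about what adding noise means in practice, here is a minimal sketch of the forward noising step used in standard DDPM-style diffusion models. It assumes a linear noise schedule, and the schedule values and function names are illustrative choices, not details taken from Imagen or Stable Diffusion. A clean image x0 is blended with Gaussian noise ε according to x_t = sqrt(ᾱ_t)·x0 + sqrt(1 − ᾱ_t)·ε; the network is trained to predict ε from x_t, which is what later lets it strip noise away step by step to produce an image.

```python
import math
import random
from typing import List, Tuple


def linear_beta_schedule(steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Per-step noise variances that grow linearly (an illustrative schedule)."""
    if steps == 1:
        return [beta_start]
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]


def alpha_bar(betas: List[float], t: int) -> float:
    """Cumulative product of (1 - beta) over steps 0..t."""
    prod = 1.0
    for beta in betas[: t + 1]:
        prod *= 1.0 - beta
    return prod


def add_noise(x0: List[float], t: int, betas: List[float], rng: random.Random) -> Tuple[List[float], List[float]]:
    """Jump directly from a clean image x0 to its noisy version at step t.

    Returns (x_t, noise). During training, the model sees x_t and t and is
    asked to predict `noise`; generation runs that denoising in reverse.
    """
    a = alpha_bar(betas, t)
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * p + math.sqrt(1.0 - a) * n for p, n in zip(x0, noise)]
    return xt, noise


if __name__ == "__main__":
    betas = linear_beta_schedule(1000)
    rng = random.Random(0)
    image = [0.8, 0.2, 0.5, 0.9]  # a toy four-pixel "image"
    early, _ = add_noise(image, 10, betas, rng)  # still close to the original pixels
    late, _ = add_noise(image, 999, betas, rng)  # almost pure Gaussian noise
    print(round(alpha_bar(betas, 10), 4), round(alpha_bar(betas, 999), 6))
```

Because the noise is known at training time, the model can be graded on how well it recovers it; memorization becomes a concern when, for some prompts, that learned denoising leads back to one specific training image instead of a new one.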

The researchers found that GAN-based models don’t have the same problem with memorizing images, but it’s unlikely that major companies will move on from diffusion unless an even more sophisticated machine learning model comes along that produces more realistic, higher-quality images.

Florian Tramèr, a professor of computer science at ETH Zurich who took part in the research, noted that many AI companies tell users, on both free and paid tiers, that they are granted a license to share or even monetize AI-generated content. The AI companies themselves also reserve some rights to these images. That could become a problem if the AI generates an image that is an exact copy of an existing copyrighted work.

With a memorization rate of just 0.3%, AI developers could look at this study and decide there isn’t much risk. Companies can work to remove duplicate images from their training data, making them less likely to be memorized. Hell, they could even develop AI systems that detect whether an output is a direct copy of an image in the training data and flag it for deletion. However, that framing obscures the full privacy risk posed by generative AI. Carlini and Tramèr also contributed to another recent paper which argued that even attempts to filter the data still didn’t prevent training data from leaking out through the model.
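The deduplication and output-flagging ideas can be illustrated with a simple perceptual hash. This sketch swaps in the well-known “average hash” technique purely for illustration; it is not what the researchers or any AI company is known to use. It assumes images have already been converted to grayscale and shrunk to a small grid (that preprocessing is left out), and the functions `average_hash`, `hamming`, `flag_near_duplicate`, and `deduplicate`, plus the `max_bits` threshold, are hypothetical names chosen for this example.

```python
from typing import Iterable, List, Sequence

# Assumption: images are already grayscale and downscaled to a small fixed
# grid (e.g. 8x8); decoding and resizing are omitted from this sketch.
Grid = Sequence[Sequence[float]]


def average_hash(grid: Grid) -> int:
    """One bit per pixel, set when the pixel is brighter than the image mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def flag_near_duplicate(output: Grid, training_hashes: Iterable[int], max_bits: int = 4) -> bool:
    """Hypothetical output filter: True if the generated image's hash is
    within `max_bits` of any training-image hash."""
    h = average_hash(output)
    return any(hamming(h, t) <= max_bits for t in training_hashes)


def deduplicate(grids: Iterable[Grid], max_bits: int = 4) -> List[Grid]:
    """Greedy training-set dedup: keep an image only if no already-kept
    image is a near-duplicate of it."""
    kept: List[Grid] = []
    kept_hashes: List[int] = []
    for g in grids:
        h = average_hash(g)
        if all(hamming(h, k) > max_bits for k in kept_hashes):
            kept.append(g)
            kept_hashes.append(h)
    return kept
```

A hash this coarse only catches near-exact copies, which fits the article’s point: filtering of this kind addresses obvious duplicates but does not by itself close off every route by which training data can leak.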

And, of course, there is a serious risk that images nobody wants reproduced will end up on users’ screens. Wallace offered a hypothetical: suppose a researcher wanted to generate a whole set of synthetic medical data, such as people’s X-rays. What would happen if the diffusion-based AI memorized and duplicated a person’s actual medical records?

“It’s very rare, so you might not notice it’s happening at first, and then you can actually publish that dataset to the web,” said the UC Berkeley student. “The goal of this work is to kind of get ahead of those potential kinds of mistakes that people might make.”
