In 2024, it has become easier to create realistic AI-generated images of people, raising concerns about how such deceptive images can be detected. Researchers at the University of Hull recently unveiled a new method for detecting AI-generated fake images by analyzing the reflections in human eyes. The technique was presented last week at the Royal Astronomical Society's National Astronomy Meeting. It adapts tools that astronomers use to study galaxies to scrutinize the consistency of light reflections in the eyeballs.

Adegumoke Owolabi, a master's student at the University of Hull, led the research under the supervision of Dr. Kevin Pimblett, Professor of Astrophysics.

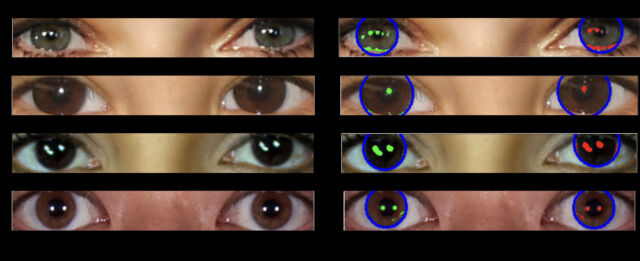

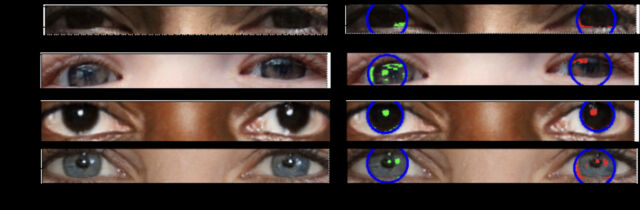

Their detection technique is based on a simple principle: A pair of eyes illuminated by the same set of light sources typically has a similarly shaped set of light reflections in each eye. Many AI-generated images so far don’t take eye reflections into account, so the simulated light reflections are often inconsistent between each eye.

In some ways, the astronomy angle isn't strictly necessary for this kind of deepfake detection. A quick glance at a pair of eyes in an image can reveal reflection inconsistencies, something portrait painters have to keep in mind. But applying astronomy tools to automatically measure and quantify eye reflections in deepfakes is a new development.

Automated detection

In a Royal Astronomical Society blog post, Pimblett explained that Owolabi developed a technique to detect eyeball reflections automatically. It then runs the reflections' morphological features through indices that compare the similarity between the left and right eyeballs. Their findings revealed that deepfakes often show differences between the two eyes.

The team applied methods from astronomy to measure and compare the reflections in the eyeballs. They used the Gini coefficient, which is typically used to measure the distribution of light in images of galaxies, to assess how uniformly the reflected light is spread across the eye's pixels. A Gini value close to 0 indicates that light is distributed evenly, while a value close to 1 indicates that light is concentrated in a single pixel.
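To make the Gini measure concrete, here is a minimal sketch, assuming each eye's reflection has already been cropped into a grayscale pixel array. It uses the form of the Gini coefficient common in galaxy morphology, computed over sorted pixel values. The researchers' exact formula, preprocessing and decision rule are not described here. The `reflections_consistent` helper and its `tolerance` value are hypothetical, included only to illustrate a left-versus-right comparison.

```python
import numpy as np


def gini(pixels: np.ndarray) -> float:
    """Gini coefficient of pixel intensities (galaxy-morphology form).

    Returns 0 when light is spread evenly across all pixels and 1 when
    all of the light sits in a single pixel.
    """
    # Flatten, take absolute values, and sort in ascending order.
    x = np.sort(np.abs(np.asarray(pixels, dtype=float).ravel()))
    n = x.size
    if n < 2 or x.sum() == 0:
        return 0.0  # degenerate input: no light, or a single pixel
    i = np.arange(1, n + 1)  # 1-based rank of each sorted pixel
    return float(np.sum((2 * i - n - 1) * x) / (x.mean() * n * (n - 1)))


def reflections_consistent(left_eye, right_eye, tolerance=0.1) -> bool:
    """Hypothetical check: treat the eyes as consistent when their
    reflection Gini values differ by no more than `tolerance`
    (an illustrative threshold, not one taken from the research)."""
    return abs(gini(left_eye) - gini(right_eye)) <= tolerance


flat = np.ones((8, 8))     # evenly lit patch
spot = np.zeros((8, 8))    # all light in one pixel
spot[3, 4] = 1.0
print(gini(flat))  # 0.0
print(gini(spot))  # 1.0
print(reflections_consistent(flat, spot))  # False
```

The two extreme patches show the ends of the scale described above. Real eye crops would fall somewhere in between, and any real threshold would have to be calibrated on labeled images.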

In the Royal Astronomical Society post, Pimblett compared how the shape of an eyeball's reflection is measured with how the shape of a galaxy is typically measured in telescope images: "To measure the shapes of galaxies, we analyze whether they are centrally compact, whether they are symmetrical, and how smooth they are. We analyze the distribution of light."

The researchers also explored CAS parameters (concentration, asymmetry, smoothness), another astronomical tool for measuring the distribution of galactic light. However, this method proved less effective at identifying fake eyes.
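As an illustration of one CAS ingredient, the sketch below computes a simplified asymmetry index: the image is rotated 180 degrees and the summed absolute difference is divided by the total light, Σ|I − I₁₈₀| / Σ|I|. This is an assumption-laden simplification. It rotates about the array centre and omits the background correction and centre optimisation used in real galaxy pipelines. It is not presented as the form the Hull team used.

```python
import numpy as np


def asymmetry(image: np.ndarray) -> float:
    """Simplified CAS asymmetry index.

    0 for an image that is identical to its 180-degree rotation; with this
    normalisation the value can reach at most 2. Rotation is about the array
    centre, with no background subtraction or centre search (simplifications).
    """
    img = np.asarray(image, dtype=float)
    total = np.abs(img).sum()
    if total == 0:
        return 0.0  # empty image: nothing to compare
    rotated = np.rot90(img, 2)  # rotate by 180 degrees
    return float(np.abs(img - rotated).sum() / total)


centred = np.zeros((5, 5))
centred[2, 2] = 1.0   # point of light at the centre: symmetric
corner = np.zeros((5, 5))
corner[0, 0] = 1.0    # point of light in a corner: maximally asymmetric
print(asymmetry(centred))  # 0.0
print(asymmetry(corner))   # 2.0
```

Concentration and smoothness would be computed separately (for example from radial light profiles and a smoothed copy of the image) and are left out to keep the sketch short.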

Arms race in detection

While eye reflection analysis offers a potential path to detecting AI-generated images, the approach may stop working if AI models evolve to include physically accurate eye reflections, perhaps applied as a post-processing step after image generation. The technique also requires a clear, close-up view of the eyeballs to work.

This approach also risks producing false positives, as even authentic photos can sometimes show inconsistent eye reflections due to varying lighting conditions or post-processing techniques. But eye reflection analysis could still be a useful tool in a larger deepfake detection toolkit that also takes into account other factors such as hair texture, anatomy, skin details, and background consistency.

While the technique is promising in the short term, Dr. Pimblett cautioned that it is not perfect. "There are false positives and false negatives; it will not catch everything," he told the Royal Astronomical Society. "But this method gives us a baseline and a plan of attack in the arms race to detect deepfakes."
