On Thursday, OpenAI released the “system card” for ChatGPT’s new GPT-4o AI model, a document that details the model’s limitations and safety testing procedures. Among other examples, the document reveals that in rare cases during testing, the model’s Advanced Voice Mode unintentionally imitated users’ voices without permission. OpenAI currently has safeguards in place to prevent this from happening, but the episode reflects the growing complexity of safely building an AI chatbot that could potentially imitate any voice from a short clip.

Advanced Voice Mode is a feature of ChatGPT that allows users to have spoken conversations with the AI assistant.

In a section of the GPT-4o system card titled “Unauthorized Voice Generation,” OpenAI describes an episode in which a noisy input somehow caused the model to suddenly imitate the user’s voice. “Voice generation can also occur in non-adversarial situations, such as when we use this ability to generate voices for ChatGPT’s advanced voice mode,” OpenAI writes. “During testing, we also observed rare instances where the model inadvertently generated output that mimicked the user’s voice.”

In this example of unintended voice generation provided by OpenAI, the AI model blurts out “No!” and continues the sentence in a voice that sounds similar to the “red teamer” heard at the beginning of the clip. (A red teamer is a person hired by a company to conduct adversarial testing.)

It would certainly be creepy to be talking to a machine and have it suddenly start talking to you in your own voice. Ordinarily, OpenAI has safeguards in place to prevent this, which is why the company says the occurrence was rare even before it developed ways to prevent it completely. But the example prompted BuzzFeed data scientist Max Woolf to tweet, “OpenAI just leaked the plot for the next season of Black Mirror.”

Voice prompt injection

How could OpenAI’s new model imitate voices? The key clue lies elsewhere in GPT-4o’s system card. To create voices, GPT-4o can apparently synthesize any type of sound found in its training data, including sound effects and music (though OpenAI discourages that behavior with special instructions).

As noted in the system card, the model can imitate essentially any voice based on a short audio clip. OpenAI guides this capability safely by providing an authorized voice sample (of a hired voice actor) that the model is instructed to imitate. The sample is supplied in the AI model’s system prompt (what OpenAI calls the “system message”) at the beginning of a conversation. “We supervise ideal completions using the voice sample in the system message as the base voice,” OpenAI writes.

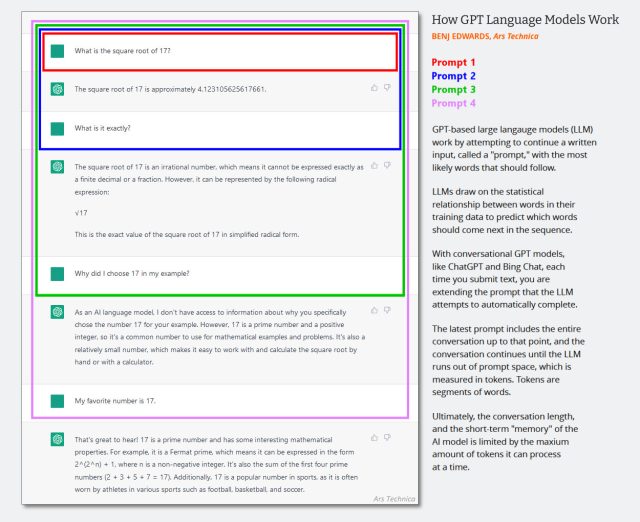

In text-only LLMs, the system message is a hidden set of text instructions that guides the chatbot’s behavior and is silently added to the conversation history just before the chat session begins. Successive interactions are appended to the same conversation history, and the entire context (often called the “context window”) is fed back into the AI model each time the user provides a new input.

(It might be time to update the diagram below, which was created in early 2023, but it shows how the context window works in an AI chat. Just imagine that the first prompt is a system message that says things like “You are a helpful chatbot. You don’t talk about violence, etc.”)

Benj Edwards / Ars Technica
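To make the context-window mechanism concrete, here is a minimal, hypothetical Python sketch of a text-only chat log. It is not OpenAI’s code: the `Conversation` class, the role names, and the `echo_model` stand-in are all illustrative assumptions. It shows the two behaviors described above: the hidden system message is placed at the start of the log before the user says anything, and every new user turn causes the whole accumulated log to be sent to the model again.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical message format: a role ("system", "user", "assistant") plus text.
Message = dict[str, str]


@dataclass
class Conversation:
    """Illustrative chat log whose full contents are re-sent to the model every turn."""

    system_message: str
    log: list[Message] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The hidden system message goes in first, before any user input.
        self.log.append({"role": "system", "content": self.system_message})

    def send(self, user_text: str, model: Callable[[list[Message]], str]) -> str:
        # Each new user input is appended to the same log...
        self.log.append({"role": "user", "content": user_text})
        # ...and the entire log (the "context window") is what the model sees.
        context = list(self.log)
        reply = model(context)
        # The model's reply is appended too, so it is part of the next turn's context.
        self.log.append({"role": "assistant", "content": reply})
        return reply


def echo_model(context: list[Message]) -> str:
    # Hypothetical stand-in for a real LLM: it only reports how much context it got.
    return f"I received {len(context)} messages."


convo = Conversation("You are a helpful chatbot. You don't talk about violence, etc.")
print(convo.send("Hi!", echo_model))                   # I received 2 messages.
print(convo.send("What did I just say?", echo_model))  # I received 4 messages.
```

The growing message count is the point: the model has no memory between calls, so the log itself carries the conversation. In a multimodal model like GPT-4o, the same slot at the top of the log could, in principle, hold audio rather than text, which is how the article describes the authorized voice sample being supplied.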

Since GPT-4o is multimodal and can process tokenized audio, OpenAI can also use audio input as part of the model’s system prompt, which is what it does when it provides the model with an authorized voice sample to imitate. The company also uses a separate system to detect whether the model is generating unauthorized audio. “We only allow the model to use certain pre-selected voices, and use an output classifier to detect if the model deviates from that,” OpenAI writes.
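The system card does not explain how OpenAI’s output classifier works, so the following is only a hypothetical sketch of one common way such a check could be built: comparing a speaker embedding of the generated audio against embeddings of the pre-selected voices using cosine similarity. The embeddings, the voice names, the `check_voice` function, and the 0.8 threshold are all assumptions for illustration; producing real speaker embeddings would require a separate speaker-encoder model that is not shown here.

```python
import math
from typing import Sequence

# Hypothetical: a speaker embedding is just a vector of floats from some speaker encoder.
Embedding = Sequence[float]


def cosine_similarity(a: Embedding, b: Embedding) -> float:
    """Cosine of the angle between two vectors; returns 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def check_voice(
    output_embedding: Embedding,
    presets: dict[str, Embedding],
    threshold: float = 0.8,  # hypothetical cutoff, not a value from OpenAI
) -> tuple[bool, str, float]:
    """Return (allowed, closest preset name, similarity) for a generated voice."""
    scores = {name: cosine_similarity(output_embedding, emb) for name, emb in presets.items()}
    closest = max(scores, key=scores.get)
    # The output "deviates" if it is not close enough to any pre-selected voice.
    allowed = scores[closest] >= threshold
    return allowed, closest, scores[closest]


# Toy three-dimensional embeddings standing in for real speaker vectors.
presets = {"preset_voice_1": [1.0, 0.0, 0.0], "preset_voice_2": [0.0, 1.0, 0.0]}
print(check_voice([0.9, 0.1, 0.0], presets)[0])  # True: close to preset_voice_1
print(check_voice([0.0, 0.0, 1.0], presets)[0])  # False: matches no preset voice
```

The design choice is an allowlist rather than a blocklist: instead of trying to recognize every voice the model should not produce (such as the user’s), the check flags anything that fails to match one of the few voices it is permitted to use.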

