Since 2017, in what spare time I have (ha!), I've been helping my colleague Eric Berger host his Houston-area weather forecasting site, Space City Weather. It's an interesting hosting challenge. On a typical day, SCW probably serves 20,000 to 30,000 page views to 10,000 to 15,000 unique visitors, a relatively easy load to handle with minimal work. But when severe weather events occur, especially in the summer when hurricanes lurk in the Gulf of Mexico, site traffic can soar to more than a million page views within 12 hours. That level of traffic requires more preparation to handle.
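To put those numbers in perspective, here is a back-of-the-envelope Python sketch that converts them into average page views per second. It assumes views are spread evenly across each window (real storm traffic is spikier, so actual peaks run higher) and uses the top of the typical-day range; the helper name `avg_rate` is hypothetical.

```python
SECONDS_PER_HOUR = 3600


def avg_rate(page_views: int, hours: float) -> float:
    """Average page views per second, assuming an even spread over the window."""
    return page_views / (hours * SECONDS_PER_HOUR)


typical = avg_rate(30_000, 24)   # busy end of a normal day
storm = avg_rate(1_000_000, 12)  # storm-day figure (a lower bound)

print(f"typical: {typical:.2f} views/s")  # typical: 0.35 views/s
print(f"storm:   {storm:.1f} views/s")    # storm:   23.1 views/s
print(f"ratio:   {storm / typical:.0f}x") # ratio:   67x
```

Even averaged out, a storm day runs at roughly 67 times the typical rate, and that is before accounting for bursts when a new forecast post goes up.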

Lee Hutchinson

For a long time, I ran SCW on a backend stack made up of HAProxy for SSL termination, Varnish Cache for on-box caching, and Nginx for the actual web server application, all fronted by Cloudflare to absorb the majority of the load. (I wrote about this setup at length on Ars a few years ago for folks who want more in-depth details.) This stack was thoroughly battle-tested and ready to absorb whatever traffic we threw at it, but it was also annoyingly complex, with multiple layers of caching to contend with, and that complexity made troubleshooting more difficult than I would have liked.

So, during the winter downtime a couple of years ago, I took the opportunity to eliminate some of the complexity and reduce my hosting stack to one monolithic web server application: OpenLiteSpeed.

Out with the old, in with the new

I didn't know much about OpenLiteSpeed (“OLS” to its friends) other than that it came up a lot in discussions about WordPress hosting, and since SCW runs WordPress, I started to get interested. OLS seemed to get a lot of praise for its integrated caching, especially where WordPress was concerned; it was reputed to be quite fast compared to Nginx; and, frankly, after five years of running the same stack, I was interested in shaking things up. OpenLiteSpeed it was!


The first big adjustment was that OLS is primarily configured through an actual GUI, with all the potentially annoying issues that brings (another port to secure, another password to manage, another public point of entry into the backend, and more PHP resources dedicated solely to the admin interface). But the GUI was fast, and it mostly exposed the settings that needed exposing. Translating my existing Nginx WordPress configuration into OLS-speak was a good acclimatization exercise, and I eventually settled on Cloudflare Tunnels as an acceptable way to keep the admin console hidden and, theoretically, secure.


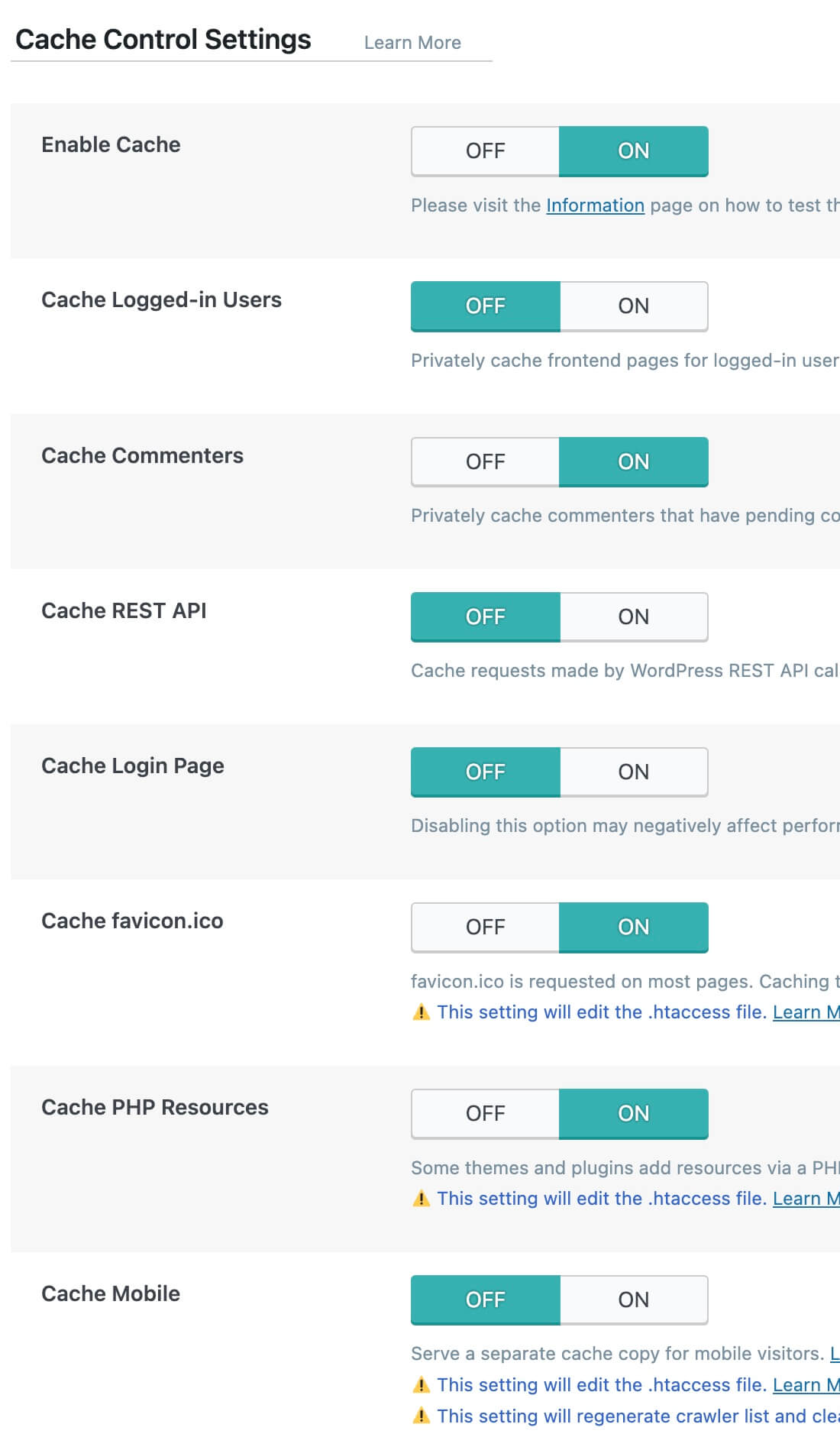

The other major adjustment was the OLS LiteSpeed Cache plugin for WordPress, which is the primary tool you use to configure how WordPress itself interacts with OLS and its built-in cache. It's a massive plugin with pages and pages of configurable options, many of which are concerned with driving use of the QUIC.cloud CDN service (run by LiteSpeed Technologies, the company that created OpenLiteSpeed and its paid sibling, LiteSpeed Web Server).

Getting the most out of WordPress on OLS means spending some time in the plugin, figuring out which options will help and which will hurt. (Perhaps unsurprisingly, there are plenty of ways to get yourself into a fair amount of trouble by being too aggressive with caching.) Fortunately, Space City Weather is a great proving ground for web servers, being a nicely active site with a very cache-friendly workload, so I hammered out a starting configuration I was reasonably happy with and, while speaking the words of the ancient sacred ritual, flipped the switch. HAProxy, Varnish, and Nginx went quiet, and OLS took up the load.
